AtomicXR: Capture atomic structures to build 3D models in MR.

Introducing AtomicXR

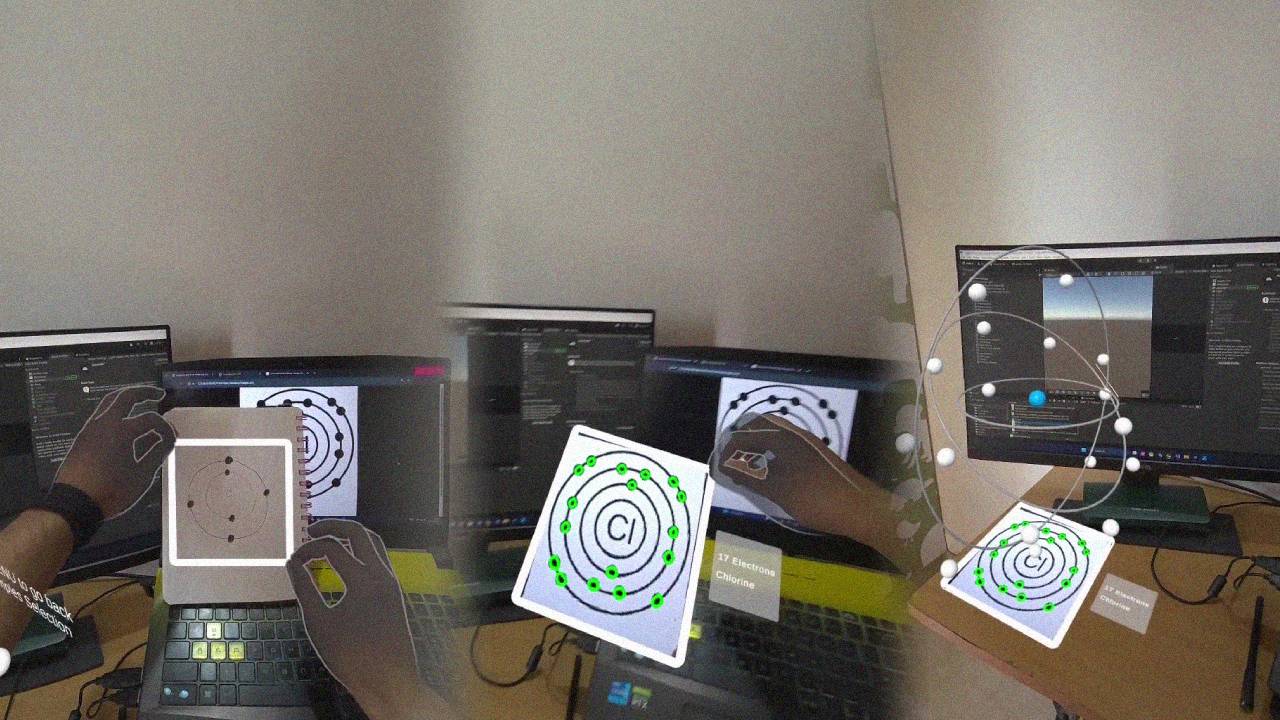

AtomicXR is a Mixed Reality project that combines the Meta Passthrough Camera API with the power of OpenCV for real-time image processing. The concept is simple yet impactful:

Pinch → Capture → Detect → Generate.

Using a hand gesture (like a pinch and drag), users can capture a sketch or printed image of an atomic structure. The system then processes the image to detect and analyze the number of electrons or elements using computer vision techniques. Based on this detection, a 3D atomic model is generated and visualized right in your physical space through Mixed Reality.
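To make the Detect step more concrete, here is a minimal sketch of one way to count electron dots once a captured image has been reduced to a black-and-white mask. It is not the AtomicXR implementation: it is a plain-Python stand-in for what OpenCV functions such as `connectedComponents` or `findContours` do, and it assumes the mask already contains only the electron dots. The `SKETCH` data and the `count_blobs` name are hypothetical.

```python
from collections import deque


def count_blobs(grid):
    """Count 4-connected groups of 1s in a binary grid (list of lists)."""
    rows = len(grid)
    cols = len(grid[0]) if rows else 0
    seen = [[False] * cols for _ in range(rows)]
    blobs = 0
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != 1 or seen[r][c]:
                continue
            # Found an unvisited foreground pixel: flood-fill the whole blob.
            blobs += 1
            seen[r][c] = True
            queue = deque([(r, c)])
            while queue:
                y, x = queue.popleft()
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    inside = 0 <= ny < rows and 0 <= nx < cols
                    if inside and grid[ny][nx] == 1 and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
    return blobs


# Hypothetical thresholded capture: '#' marks ink, '.' marks background.
SKETCH = [
    "..##......",
    "..##...#..",
    ".......#..",
    ".#........",
    ".##...###.",
]
grid = [[1 if ch == "#" else 0 for ch in row] for row in SKETCH]
electron_count = count_blobs(grid)
print(electron_count)  # 4
```

A real capture would also contain the nucleus and the orbit rings, so those would need to be filtered out (for example by blob size or shape) before counting.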

This project blends spatial interaction with educational visualization, making complex atomic concepts interactive and easier to understand in real time.

Key Features

- Natural World Capture: A smooth and intuitive interaction system lets users pinch and drag with hand gestures to capture atomic structure sketches from the real world through the Passthrough camera. This makes the interaction feel natural, creative, and human-centered.

- Advanced Detection Algorithm: The app leverages multiple layers of OpenCV image processing to accurately analyze atomic structure drawings. Over several processing passes, it extracts key visual cues, such as the number of electrons, and determines which element the user has drawn.

- Dynamic 3D Structure Generation: Based on the detected electron count, the system generates a 3D atomic model using rings and spheres. Each component is placed and angled correctly to represent the element's atomic structure in 3D space, offering a clear and interactive visualization.
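
The generation step described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the project's actual code: it fills shells with the simplified 2n² capacity rule (which matches real configurations only up to argon, 18 electrons), spaces electrons evenly on each ring, and tilts each ring by a fixed step. The names `shell_layout`, `ring_positions`, and `build_atom`, and the `ring_spacing` and `tilt_step` values, are hypothetical.

```python
import math

# Element symbols indexed by electron count - 1 (neutral atoms, first ten only).
SYMBOLS = ["H", "He", "Li", "Be", "B", "C", "N", "O", "F", "Ne"]


def shell_layout(electrons):
    """Fill shells in order using capacity 2 * n^2 (simplified model)."""
    shells = []
    n = 1
    while electrons > 0:
        placed = min(2 * n * n, electrons)
        shells.append(placed)
        electrons -= placed
        n += 1
    return shells


def ring_positions(count, radius, tilt_deg):
    """Evenly space `count` points on a ring tilted around the x-axis."""
    tilt = math.radians(tilt_deg)
    points = []
    for i in range(count):
        angle = 2 * math.pi * i / count
        x = radius * math.cos(angle)
        y = radius * math.sin(angle)
        # Rotate the flat ring point (x, y, 0) around the x-axis by `tilt`.
        points.append((x, y * math.cos(tilt), y * math.sin(tilt)))
    return points


def build_atom(electrons, ring_spacing=0.1, tilt_step=60.0):
    """Return one dict per ring: its radius, tilt, and electron positions."""
    rings = []
    for index, count in enumerate(shell_layout(electrons)):
        radius = ring_spacing * (index + 1)  # arbitrary scene units
        tilt = tilt_step * index
        rings.append({
            "radius": radius,
            "tilt_deg": tilt,
            "electrons": ring_positions(count, radius, tilt),
        })
    return rings


atom = build_atom(6)  # six detected electrons
print(SYMBOLS[6 - 1], [len(ring["electrons"]) for ring in atom])  # C [2, 4]
```

Each returned ring could then drive one ring mesh plus one sphere per electron position in the MR scene, with the nucleus placed at the origin.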

Behind the AtomicXR Development

The concept for this project began back in 2021 as part of my final year college project. At that time, I was experimenting with Augmented Reality on Android phones, capturing atomic structure images and analyzing them with OpenCV to generate basic 3D models. (Link to old project)

Later, when the Meta Passthrough Camera API was released, the idea sparked again. I realized this could be the perfect opportunity to bring my old project into a more immersive Mixed Reality environment.

That’s how AtomicXR was born: a fresh take on the original "Atomic" project, now rebuilt with hand interactions, spatial understanding, and real-time 3D generation in MR.

Future Development

While the core concept of AtomicXR works well, I’ve identified a key limitation: relying solely on OpenCV makes the detection process inconsistent, especially under varying lighting conditions or with different drawing styles and image types.

To make the system more scalable and robust, I plan to move from traditional image processing to a custom-trained AI model. I'm also exploring the use of Gemini or ChatGPT APIs for more intelligent analysis. This shift will not only improve accuracy but could also provide real-time feedback or suggestions to correct the drawing, adding a valuable learning layer to the experience.